What benchmarks or baselines are useful when evaluating AI search performance?

Also asked as:

- What are the key AI search metrics?
- How do I benchmark AI search performance?

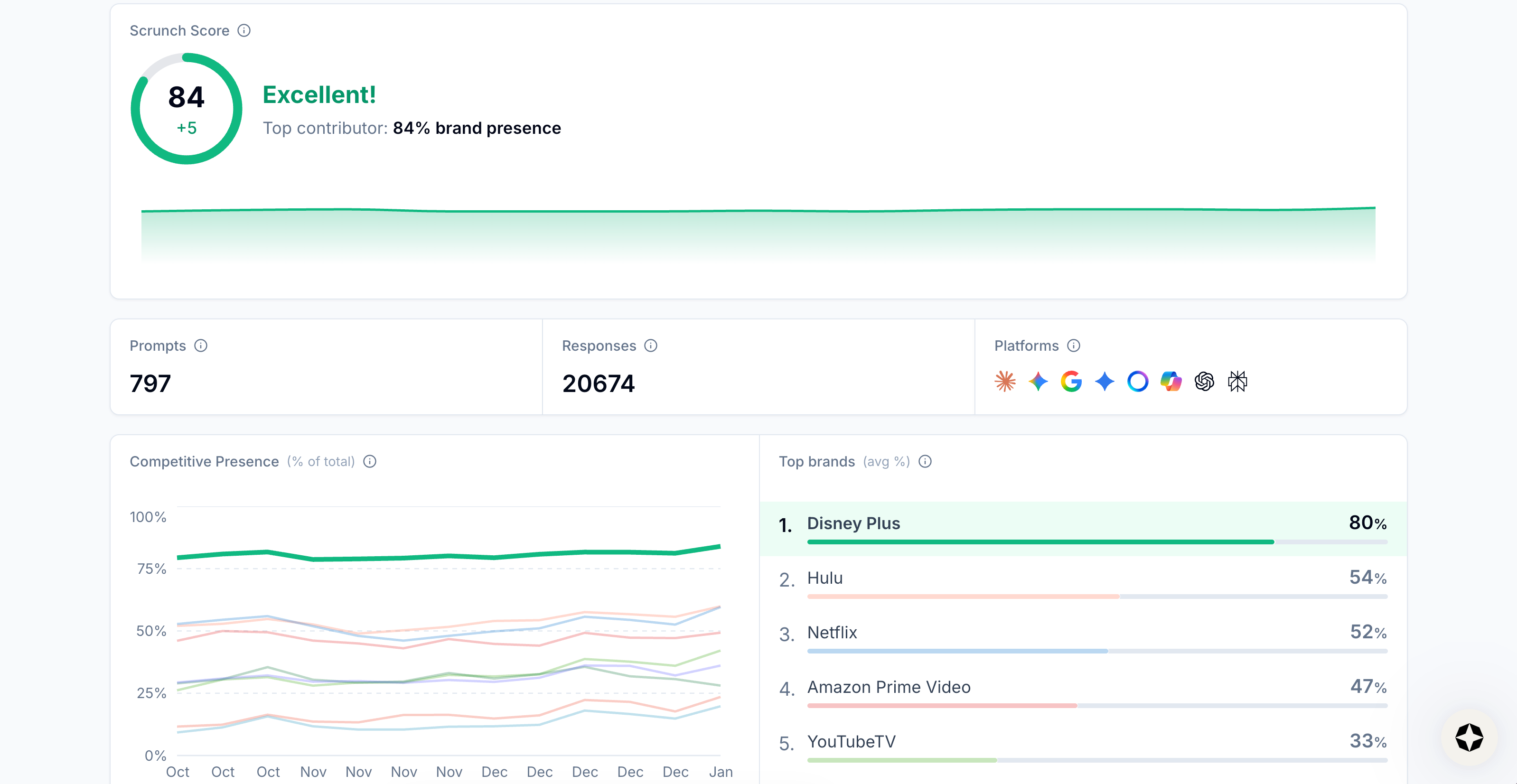

Scrunch recommends tracking brand presence, citations, referral traffic, AI agent traffic, and share of voice versus competitors as key performance indicators.

Example

A Scrunch user establishing initial benchmarks and baselines should measure:

Brand presence

Brand mentions and citations in AI responses across all platforms, broken down by persona, country, topic, individual platform, funnel stage, or custom tag.
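
As a rough illustration, a per-dimension breakdown like this reduces to a grouped rate: responses in which the brand appears, divided by all tracked responses in that group. The sketch below is not how Scrunch computes it. It assumes a hypothetical `Response` record (the field names are illustrative, not an export format) and treats the brand as present if it is either mentioned or cited.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Response:
    """Hypothetical record of one AI response to a tracked prompt (illustrative fields)."""
    platform: str
    persona: str
    topic: str
    brand_mentioned: bool
    brand_cited: bool


def presence_rate_by(responses, dimension):
    """Share of responses in which the brand appears, grouped by one dimension."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for r in responses:
        key = getattr(r, dimension)  # e.g. "platform", "persona", "topic"
        totals[key] += 1
        # Assumption: a mention or a citation both count as "present".
        if r.brand_mentioned or r.brand_cited:
            hits[key] += 1
    return {key: hits[key] / totals[key] for key in totals}


# Illustrative sample data, not real measurements.
responses = [
    Response("ChatGPT", "buyer", "pricing", True, False),
    Response("ChatGPT", "buyer", "pricing", False, False),
    Response("Perplexity", "buyer", "pricing", True, True),
]
print(presence_rate_by(responses, "platform"))  # {'ChatGPT': 0.5, 'Perplexity': 1.0}
```

Swapping the `dimension` argument (for example `"persona"` or `"topic"`) gives the other breakdowns from the same records.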

Citations

How often their brand is cited in AI responses to business-relevant prompts, as well as which sources are most frequently cited for the prompts they care about.
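
Both halves of this metric can be sketched from a list of the source URLs each AI response cited: the citation rate is the fraction of responses citing at least one page on the brand's domain, and the most-cited sources are a simple hostname tally. The domains and data below are hypothetical, and stripping a leading `www.` is a simplifying assumption so that `www.example-brand.com` and `example-brand.com` count as one source.

```python
from collections import Counter
from urllib.parse import urlparse


def _host(url):
    """Lowercased hostname of a URL with a leading "www." removed ("" if none)."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def on_domain(url, domain):
    """True if the URL's host is the domain or one of its subdomains."""
    host = _host(url)
    return host == domain or host.endswith("." + domain)


def citation_rate(responses, brand_domain):
    """Fraction of responses that cite at least one URL on the brand's domain."""
    if not responses:
        return 0.0
    cited = sum(any(on_domain(u, brand_domain) for u in urls) for urls in responses)
    return cited / len(responses)


def top_cited_sources(responses, n=3):
    """Most frequently cited hostnames across all responses."""
    return Counter(_host(u) for urls in responses for u in urls).most_common(n)


# Each inner list holds the URLs one AI response cited (illustrative data).
cited_urls = [
    ["https://www.example-brand.com/pricing", "https://reviews.example/top-tools"],
    ["https://reviews.example/top-tools", "https://competitor.example/blog"],
    ["https://example-brand.com/docs"],
]
print(citation_rate(cited_urls, "example-brand.com"))
print(top_cited_sources(cited_urls))
```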

Referral traffic

Visits to their website that come from links in AI responses to target prompts.
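
One common way to approximate this from your own analytics is to classify each visit's HTTP referrer by AI platform. The sketch below assumes an illustrative, non-exhaustive set of referrer hosts; check it against the hosts that actually appear in your data. A referrer generally identifies only the platform, not the prompt, and visits with no referrer are not counted, so treat the result as a lower bound.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative, non-exhaustive mapping of referrer hosts to AI platforms.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
    "claude.ai": "Claude",
}


def ai_platform_for(referrer):
    """Return the AI platform a referrer URL belongs to, or None."""
    if not referrer:
        return None  # direct visits or stripped referrers carry no signal
    host = urlparse(referrer).hostname or ""
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host)


def ai_referral_counts(referrers):
    """Count visits per AI platform from a list of HTTP Referer values."""
    return Counter(p for p in map(ai_platform_for, referrers) if p)


# Sample Referer values from a hypothetical analytics export.
referrers = [
    "https://chatgpt.com/",
    "https://www.google.com/search?q=ai+search",
    "",
    "https://www.perplexity.ai/search/abc",
    "https://chat.openai.com/c/123",
]
print(ai_referral_counts(referrers))
```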

AI agent traffic

How often LLM platforms send agents to access content on their website for training, indexing, and retrieval.
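
A minimal sketch of measuring this from server access logs, assuming you can extract each request's user-agent string. The token-to-purpose mapping below is illustrative, based on crawler names these vendors publish; verify it against each vendor's current documentation. User-agent strings can be spoofed, so stricter setups also check the vendors' published IP ranges.

```python
from collections import Counter

# Illustrative mapping of user-agent tokens to (operator, purpose).
# Verify against each vendor's current crawler documentation before relying on it.
AI_AGENT_TOKENS = {
    "GPTBot": ("OpenAI", "training"),
    "OAI-SearchBot": ("OpenAI", "indexing"),
    "ChatGPT-User": ("OpenAI", "retrieval"),
    "ClaudeBot": ("Anthropic", "training"),
    "PerplexityBot": ("Perplexity", "indexing"),
    "Perplexity-User": ("Perplexity", "retrieval"),
}


def classify_agent(user_agent):
    """Return (operator, purpose) for a known AI agent, else None."""
    ua = user_agent.lower()
    for token, info in AI_AGENT_TOKENS.items():
        if token.lower() in ua:
            return info
    return None


def agent_hits_by_purpose(user_agents):
    """Count requests from known AI agents, grouped by purpose."""
    counts = Counter()
    for ua in user_agents:
        info = classify_agent(ua)
        if info is not None:
            counts[info[1]] += 1
    return counts


# Sample user-agent strings from a hypothetical access log.
log_agents = [
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.1)",
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; ChatGPT-User/1.0)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0 Safari/537.36",
    "Mozilla/5.0 (compatible; PerplexityBot/1.0)",
]
print(agent_hits_by_purpose(log_agents))
```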

Share of voice vs. competitors

Brand presence and citations versus competitors for key prompts.
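
One common definition of share of voice is each brand's appearances divided by the total appearances of all tracked brands across the responses to key prompts. The sketch below uses that definition, which is an assumption rather than Scrunch's formula. It counts a brand at most once per response, so a response that names a competitor five times does not outweigh one that names it once. Brand names and data are hypothetical.

```python
from collections import Counter


def share_of_voice(mentions_per_response, brands):
    """Each tracked brand's share of all tracked-brand appearances.

    mentions_per_response: one list of brand names per AI response.
    brands: your brand plus the competitors you track.
    """
    tracked = set(brands)
    counts = Counter()
    for mentioned in mentions_per_response:
        # Count each brand at most once per response; ignore untracked names.
        for brand in set(mentioned) & tracked:
            counts[brand] += 1
    total = sum(counts.values())
    if total == 0:
        return {b: 0.0 for b in brands}
    return {b: counts[b] / total for b in brands}


# Hypothetical responses to key prompts; "Acme" is the user's own brand.
responses = [["Acme", "Globex"], ["Globex", "Globex"], ["Acme", "Initech"], ["Other"]]
print(share_of_voice(responses, ["Acme", "Globex", "Initech"]))
```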

Follow-up question: What are realistic target benchmarks for AI search?

There aren’t universal benchmarks for AI search, but to set realistic targets, Scrunch recommends:

Starting with the current baseline

Measure existing performance across business-relevant prompts and aim for gradual improvement.
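
When comparing against a baseline, it can help to report both the percentage-point change and the relative change, because they tell different stories on small baselines: moving from 2% to 4% presence is +2 points but +100% relative. A minimal sketch, assuming the metric is a rate between 0 and 1; the numbers are illustrative.

```python
def change_vs_baseline(baseline, current):
    """Compare a rate metric (0..1) to its baseline.

    Returns (percentage-point change, relative change). Relative change is
    None when the baseline is zero, since it is undefined there.
    """
    points = (current - baseline) * 100
    relative = (current - baseline) / baseline if baseline else None
    return points, relative


# Illustrative numbers: presence rose from 20% to 25% of tracked prompts.
points, relative = change_vs_baseline(0.20, 0.25)
print(f"{points:+.1f} pts, {relative:+.0%} relative")
```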

Comparing to competitors

Identify the top competitors that consistently appear in AI responses to target prompts, and work to gain share of voice against them.

Focusing on high-priority topics

Select a small number of key areas in which to improve performance, and track progress over several weeks.
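
One way to summarize several weeks of tracking per priority topic is an ordinary least-squares slope of the weekly metric, read as "points gained per week". The sketch below is an illustration, not a prescribed method: the topic names and rates are hypothetical, and with few or noisy weeks a longer window or a simple first-versus-last comparison may be more appropriate.

```python
def weekly_slope(rates):
    """Least-squares slope of a weekly series, in metric units per week."""
    n = len(rates)
    if n < 2:
        raise ValueError("need at least two weeks of data")
    mean_x = (n - 1) / 2  # weeks are indexed 0, 1, ..., n - 1
    mean_y = sum(rates) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(rates))
    var = sum((x - mean_x) ** 2 for x in range(n))
    return cov / var


# Hypothetical weekly brand presence rates for two priority topics.
weekly_presence = {
    "pricing": [0.10, 0.12, 0.15, 0.17],
    "integrations": [0.30, 0.29, 0.31, 0.30],
}
for topic, rates in weekly_presence.items():
    print(f"{topic}: {weekly_slope(rates) * 100:+.1f} pts/week")
```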